Before I launch into the deconstruction of the data, I need to state that I do have a potential conflict of interest here. I am very involved in the CDI research from the health services and epidemiology perspective. But equally importantly, I have received research and consulting funding from ViroPharma, the manufacturer of oral Vancocin that is used to treat severe CDI.

And here is an important piece of background information: the reason the study was done. The recent evidence-based guideline on CDI developed jointly by SHEA and IDSA recommends initial treatment with metronidazole in the case of an infection that does not meet severe criteria, while advocating the use of vancomycin for severe disease. We will get into the reasons for this recommendation below.

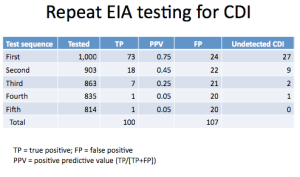

OK, with that out of the way, let us consider the information at hand.

My first contention is that this is a great example of how NOT to conduct a study (or how not to report it, or both). The study was a retrospective chart review at a single VA hospital in Chicago. All patients admitted between 1/09 and 3/10 who had tested positive for C. difficile toxin were identified and their hospitalization records reviewed. A total of 147 patients were thus studied, of whom 25 (17%) received vancomycin and 122 (83%) metronidazole. It is worth mentioning that of the 122 initially treated with metronidazole, 28 (23%) were switched over to vancomycin treatment. The reasons for the switch as well as their outcomes remain obscure.

The treatment groups were stratified based on disease severity. Though the abstract states that severity was judged based on "temperature, white blood cell count, serum creatinine, serum albumin, acute mental status changes, systolic blood pressure<90, requirement for pressors," the thresholds for most of these variables are not stated. One can only assume that this stratification was done consistently and comported with the guideline.

Here is how the severity played out:

Nowhere can I find where those patients who were switched from metronidazole to vancomycin fell in these categories. And this is obviously important.

Now, for the outcomes. Those assessed were "need for colonoscopy, presence of pseudomembranes, adynamic ileus, recurrence within 30 days, reinfection > 30 days post therapy, number of recurrences >1, shock, megacolon, colon perforation, emergent colectomy, death." But what was reported? The only outcome to be reported in detail is recurrence in 30 days. And here is how it looks:

The other outcomes are reported merely as "M was equivalent to V irrespective of severity of illness (p=0.14). There was no difference in rate of recurrence (p= 0.41) nor in rate of complications between the groups (p=0.77)."

What the heck does this mean? Is the implication that the p-value tells the whole story? This is absurd! In addition, it does not appear to me from the abstract or the FPC report as if the authors bothered to do any adjusting for potential confounders. Granted, their minuscule sample size did not leave much room for that, but a lack of attempt alone invalidates the conclusion.

Oh, but if this were only the biggest of the problems! I'll start with what I think is the least of the threats to validity and work my way to the top of that heap, skipping much in the middle, as I do not have the time and the information available is full of holes. First, in any observational study of treatment there is a very strong possibility of confounding by indication. I have talked about this phenomenon previously here. I think of it as a clinician noticing something about the patient's severity of illness that does not manifest as a clear physiologic or laboratory sign, yet is very much present. A patient with this characteristic, although on paper looking much like one whose disease is not that severe, will be treated as someone at a higher threat level. In this case it may translate into vancomycin treatment for patients who do not meet our criteria for severe disease, but nevertheless are severely ill. If present, this type of confounding blunts the observed differences between groups, because the sicker patients end up concentrated in the vancomycin arm and drag its outcomes down.

The lack of adjustment for potential confounding of any sort is a huge issue that negates any possibility of drawing a valid conclusion. Simply comparing groups based on severity of CDI does not eliminate the need to compare based on other factors that may be related to both the exposure and the outcome. This is pretty elementary. But again, this is minor compared to the fatal flaw.

And here it is, the final nail in the coffin of this study for me: sample size and superiority design. First, the abstract and the write-up say nothing of what the study was powered to show. At least if this information had been available, we could make slightly more sense out of the p-values presented. But, no, this is nowhere to be found. As we all know, finding statistical significance depends on the effect size and the variation within the population: the smaller the effect size and the greater the variation, the more subjects are needed to show a meaningful difference. Note, I said meaningful, NOT significant, and this they likewise neglect. What would be a clinically meaningful difference in the outcome(s)? Could an 11% difference in recurrence rates be clinically important? I think so. But it is not statistically significant, you say! Bah-humbug, I say, go back and read all about the bunk that p-values represent!
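To put rough numbers on the power problem, here is a back-of-the-envelope sketch using the usual normal approximation for comparing two proportions. The abstract gives no power calculation, so everything here is an assumption of mine: the recurrence rates of 20% and 9% are hypothetical values chosen only to produce an 11-point gap, and the function names `n_per_arm` and `approx_power` are my own. Only the arm sizes (122 and 25) come from the study.

```python
from math import ceil, sqrt
from statistics import NormalDist

_norm = NormalDist()  # standard normal distribution

def n_per_arm(p1, p2, alpha=0.05, power=0.80):
    """Approximate patients needed per arm (equal arms, two-sided test)."""
    z_a = _norm.inv_cdf(1 - alpha / 2)
    z_b = _norm.inv_cdf(power)
    p_bar = (p1 + p2) / 2
    numerator = (z_a * sqrt(2 * p_bar * (1 - p_bar))
                 + z_b * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p1 - p2) ** 2)

def approx_power(p1, n1, p2, n2, alpha=0.05):
    """Approximate power of a two-sided two-proportion z-test with unequal arms."""
    z_a = _norm.inv_cdf(1 - alpha / 2)
    p_pool = (p1 * n1 + p2 * n2) / (n1 + n2)
    se_null = sqrt(p_pool * (1 - p_pool) * (1 / n1 + 1 / n2))
    se_alt = sqrt(p1 * (1 - p1) / n1 + p2 * (1 - p2) / n2)
    return _norm.cdf((abs(p1 - p2) - z_a * se_null) / se_alt)

# HYPOTHETICAL recurrence rates: 20% on metronidazole vs 9% on vancomycin.
print(n_per_arm(0.20, 0.09))               # patients needed per arm for 80% power
print(approx_power(0.20, 122, 0.09, 25))   # power with the study's actual arm sizes
```

Under these assumed rates the calculation asks for on the order of 160 patients per arm, and with arms of 122 and 25 the chance of detecting such a gap comes out at roughly one in five. Different assumed rates will move these figures, but not by enough to rescue a 25-patient arm.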

One final issue, and this is that a superiority study is the wrong design here, in the absence of a placebo arm. In fact, the appropriate design is a non-inferiority study, with a very explicit development of valid non-inferiority margins that have to be met. It is true that a non-inferiority study may signal a superior result, but only if it is properly designed and executed, which this is not.
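For readers who have not seen one, a non-inferiority comparison can be sketched in a few lines. This is a minimal illustration under my own assumptions, not the analysis any of these investigators ran: the failure counts are hypothetical, the 10-point margin is picked purely for illustration (a real margin has to be justified clinically and set in advance), and the function `noninferiority_check` is a name I made up. It uses a simple Wald confidence interval for the difference in failure rates.

```python
from math import sqrt
from statistics import NormalDist

def noninferiority_check(fail_new, n_new, fail_ref, n_ref, margin, alpha=0.05):
    """Wald CI for (failure rate new - failure rate reference).

    Non-inferiority is claimed only if the upper confidence bound
    stays below the pre-specified margin.
    """
    p_new = fail_new / n_new
    p_ref = fail_ref / n_ref
    diff = p_new - p_ref
    se = sqrt(p_new * (1 - p_new) / n_new + p_ref * (1 - p_ref) / n_ref)
    z = NormalDist().inv_cdf(1 - alpha / 2)
    lower, upper = diff - z * se, diff + z * se
    return diff, lower, upper, upper < margin

# HYPOTHETICAL counts: 24 failures of 122 on metronidazole, 2 of 25 on vancomycin.
diff, lower, upper, noninferior = noninferiority_check(24, 122, 2, 25, margin=0.10)
print(f"difference = {diff:.3f}, 95% CI = ({lower:.3f}, {upper:.3f})")
print("non-inferior" if noninferior else "non-inferiority NOT shown")
```

With these made-up counts the interval includes zero, so a superiority test would report "no significant difference," yet the upper bound sits well above the margin, so non-inferiority is not shown either. That is exactly the gap between "we found no difference" and "the treatments are equivalent."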

So, am I surprised that the study found "no differences" as supported by the p-values between the two treatments? Absolutely not. The sample size, the design and other issues touched on above preclude any meaningful conclusions being made. Yet this does not seem to stop the authors from doing exactly that, and the press from parroting them. Here is what the lead author states with aplomb:

"There is a need for a prospective, head-to-head trial of these two medications, but I’m not sure who’s going to fund that study," Dr. Saleheen said in an interview at the meeting, which was sponsored by the American Society for Microbiology. "There is a paucity of data on this topic so it’s hard to say which antibiotic is better. We’re not jumping to any conclusions. There is no fixed management. We have to individualize each patient and treat accordingly."

OK, so I cannot disagree with the individualized treatment recommendation. But do we really need a "prospective head-to-head trial of these two medications"? I would say "yes," if there were not already not one but two randomized controlled trials addressing this very question: one by Zar and colleagues, and another done as a regulatory study of the failed Genzyme drug tolevamer. Both of the trials contained separate arms for metronidazole and vancomycin (the Genzyme trial also had a tolevamer arm), and both stratified by disease severity. Zar and colleagues reported that in the severe CDI group the rate of clinical response was 76% in the metronidazole-treated patients versus 97% in the vancomycin group (p=0.02). In the tolevamer trial, presented as a poster at the 2007 ICAAC, there was an 85% clinical response rate to vancomycin and 65% to metronidazole (p=0.04).

We can always desire a better trial with better designs and different outcomes, but at some point practical considerations have to enter the equation. These are painstakingly performed studies that show a fairly convincing and consistent result. So, to pit the current deeply flawed study against these findings is foolish, which is why I suspect the investigators failed to mention anything about these RCTs.

Why do I seem so incensed by this report? I am really getting impatient with both scientists and reporters for willfully misrepresenting the strength and validity of data. This makes everyone look like idiots, but more importantly such detritus clogs the gears of real science and clinical decision-making.