I recently peer-reviewed a manuscript on Clostridium difficile surveillance for a journal, which prompted me to reread this paper by Lance Peterson and Ari Robicsek, published in the Annals of Internal Medicine in August 2009. The paper is a great reminder of the high risk of false-positive results when a moderately sensitive yet highly specific test is repeated for a disease of low prevalence.

The case in point is C. difficile testing, specifically with the enzyme immunoassays (EIA) for toxins A and/or B, which are of particular interest to me. A frequent clinical dilemma is what to do when a patient has symptoms and signs suggestive of C. diff infection (CDI), yet the initial test comes back negative. Frequently the clinician will repeat testing, possibly more than once. And on occasion, the repeat testing will yield a positive result. But how accurate is this positive result?

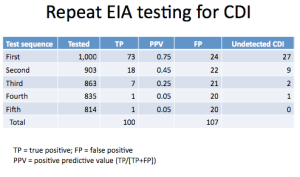

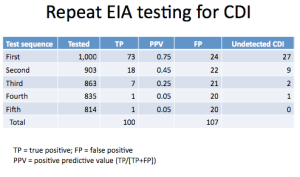

To answer this question, Peterson and Robicsek constructed a simple model, depicted in Table 2 of the paper (I have reproduced a part of it below). The authors took the EIA characteristics from the literature: sensitivity = 73.3%, specificity = 97.6%. They also assumed a true prevalence of disease of 10% among patients with suspected CDI. So, starting out with a hypothetical 1,000-patient cohort with suspected CDI, the results look as follows:

What comes through loud and clear is that with every round of repeat testing, the positive predictive value degrades dramatically, until the result becomes completely useless. Going even further, the result may be harmful, since we have now gone from 1/4 of all detected positives being false to a whopping > 1/2! And now we need to think long and hard about what to do with these results. One other point: merely going from a single test to a second round, while adding a substantial number of true positives (18), ends up identifying an even greater number of people without the disease as having CDI (22)!
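The arithmetic behind this degradation can be sketched in a few lines of Python. This is my own minimal illustration, not the authors' code: it assumes that only patients who tested negative are retested, and that each round's result is independent of the previous one given true disease status. The function name `repeat_testing` and its parameters are hypothetical. Because it works with unrounded expected counts, its numbers can differ by a patient or two from the published table.

```python
def repeat_testing(n=1000, prevalence=0.10, sensitivity=0.733,
                   specificity=0.976, rounds=3):
    """Expected results of retesting only those who tested negative.

    Assumes test results are independent across rounds, conditional
    on true disease status (a simplifying assumption).
    """
    diseased = n * prevalence   # untested or still-negative true cases
    healthy = n - diseased      # untested or still-negative non-cases
    results = []
    for r in range(1, rounds + 1):
        true_pos = diseased * sensitivity
        false_pos = healthy * (1 - specificity)
        results.append({
            "round": r,
            "true_pos": true_pos,
            "false_pos": false_pos,
            # PPV of a positive result obtained in this round
            "ppv": true_pos / (true_pos + false_pos),
        })
        # Only the negatives move on to the next round of testing
        diseased -= true_pos
        healthy -= false_pos
    return results


if __name__ == "__main__":
    for row in repeat_testing():
        print(f"round {row['round']}: TP={row['true_pos']:.1f} "
              f"FP={row['false_pos']:.1f} PPV={row['ppv']:.1%}")
```

Under these assumptions the per-round positive predictive value falls from roughly 77% on the first test to just under 50% on the second and about 20% on the third. The pool of true cases shrinks quickly with each round, while the pool of non-cases who can produce a false positive stays nearly the same size.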

This illustrates the great potential for misclassification of CDI that we run into in surveillance studies, leading to inflated disease estimates. The moral of the story is that we always need to know what proportion of positive tests are in fact repeat tests following a negative assay. And I am even beginning to think that we need to limit ourselves to at most two consecutive tests when talking about incidence and prevalence of CDI.

Very insightful post. Physician emotions get involved in these interpretations, adding to the decision fog. Dogma overwhelms our brains at times, too. There's nothing like a reality reorientation to rein us in. Thanks.